English translation of « Photographie de synthèse et architecture »

Preamble to the English translation: synthesis photography is a specific branch of computer-generated imagery (CGI) in which the process of taking an image is modeled on real photography.

From 1 to 3 February 2011, Monaco hosted Imagina, the European event for 3D simulation and visualization. On this occasion, I had the opportunity to give a presentation on the first day, in the session dedicated to architecture. My lecture was illustrated with dozens of images and animations. After my talk, a short debate took place in the hall on the issue of photorealism in the graphical representation of architecture, with Frédéric Genin, architect and moderator of the session, and Bernard Reichen, architect, urbanist and « great witness » of the session.

Foreword

In what way, in the field of architectural graphic representation, is CGI actually photography in digital space? Drawing on the experience of photographic practice, we will look at some hints for giving meaning, a language, to synthesis photography in the graphical representation of architecture (fig. 00).

Synthesis photography

In what way is CGI in architecture actually synthesis photography? CGI borrows several elements from photography. As in a photographic studio, there is a scene (fig. 01) made of: objects (walls, chairs, people, etc.); materials (colors, reflectivity, transparency, richness of detail, etc.); lights (point, parallel, conical, area, etc.).

There is also a camera (fig. 02), often represented as such in CGI software, with a body, a lens and a sensor (film or digital), the sensor corresponding in CGI to the size of the output image. Note that body and lens are not differentiated in CGI.

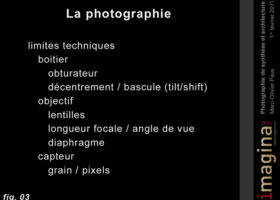

Let's look at what characterizes photography on the technical side, or rather at its technical limitations (fig. 03): the body (the shutter, e.g. with curtains; the exposure time; tilt/shift, where the optical axis is off-center or not perpendicular to the sensor); the lens (lens groups of varying complexity; the focal length, hence the angle of view; the diaphragm, which sets the amount of light passing through the lens); the sensor (grain or pixel size, hence sensitivity).

And then, of course, there is the exposure triad (fig. 04), which is rarely found in computer-generated imagery because it is usually considered only by photographers: aperture; exposure time (wrongly called « speed »); sensitivity.
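The three settings of the triad can be folded into a single number, the exposure value, which is one way a renderer could expose them. A minimal sketch in Python, assuming the usual photographic definition EV = log2(N²/t) referred to ISO 100; the function names are ours, for illustration only:

```python
import math

def ev100(f_number, exposure_time_s, iso):
    """Exposure value referred to ISO 100: log2(N^2 / t * 100 / S).

    f_number is the aperture N, exposure_time_s the shutter time t in seconds,
    iso the sensitivity S. Equal EV100 means equal image brightness.
    """
    return math.log2(f_number ** 2 / exposure_time_s * 100.0 / iso)

def same_exposure(a, b, tol=1e-9):
    """True if two (N, t, S) settings expose the image identically."""
    return abs(ev100(*a) - ev100(*b)) < tol

# Three equivalent settings, trading one stop against another:
print(ev100(4.0, 1 / 4, 100))   # f/4, 1/4 s, ISO 100 -> 6.0
print(ev100(2.0, 1 / 16, 100))  # two stops wider, two stops shorter -> 6.0
print(ev100(4.0, 1 / 8, 200))   # half the time, double the sensitivity -> 6.0
```

What the single number hides is precisely what matters here: each setting has its own visual side effect (depth of field for the aperture, motion blur for the time, grain or noise for the sensitivity).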

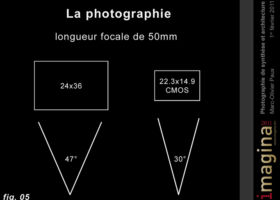

Computer-generated imagery thus uses the concept of a camera with an angle of view. Sometimes only the focal length is given, but the angle of view depends on the ratio between focal length and sensor size (or the pixel size of the rendered image) (fig. 05). In fact, only the angle of view is relevant to graphical representation; giving the focal length refers to handling a physical camera, but forgets that a 24×36 sensor format no longer has any meaning there. Working by focal length is already of limited relevance in photography; in computer-generated imagery it does not make the work any easier.
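The dependence of the angle of view on both focal length and sensor size is the rectilinear pinhole relation θ = 2·atan(d / 2f), where d is the sensor width, height or diagonal. A short Python sketch (function names are illustrative) that converts in both directions and derives the vertical angle from the output image's pixel dimensions, which is all a CGI camera actually needs:

```python
import math

def angle_of_view(sensor_mm, focal_mm):
    """Angle of view in degrees for one sensor dimension (width, height or
    diagonal), from the rectilinear relation 2 * atan(d / 2f)."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))

def focal_for_angle(sensor_mm, angle_deg):
    """Inverse relation: the focal length that yields a given angle of view."""
    return sensor_mm / (2 * math.tan(math.radians(angle_deg) / 2))

def vertical_angle(horizontal_deg, width_px, height_px):
    """Vertical angle implied by a horizontal angle and the image size in
    pixels (square pixels assumed). No focal length or sensor is involved."""
    half = math.tan(math.radians(horizontal_deg) / 2) * height_px / width_px
    return math.degrees(2 * math.atan(half))

# The same 50 mm focal length gives different angles on different sensor widths:
print(round(angle_of_view(36.0, 50.0), 1))  # 24x36 film, horizontal angle
print(round(angle_of_view(23.6, 50.0), 1))  # a smaller sensor: narrower view
print(round(vertical_angle(60.0, 1920, 1080), 1))
```

The last call illustrates the point of the paragraph: once the horizontal angle is chosen, the image's pixel dimensions fully determine the rest.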

We saw that photography and CGI also share the scene, with its objects, materials and lights. But since we cannot reproduce the world as a whole and in all its detail, the CGI scene is generally limited to what we want to photograph, with no more detail than necessary. Reflections must of course be taken into account, as well as the surfaces needed for indirect lighting, that is to say, surfaces that themselves become light sources.

But in CGI, the technical limitations of physical photography in the real world do not exist. Yet these limitations have produced a meaningful aesthetic, and introducing them into CGI would provide elements of a language for synthesis photography. In fact, this is not entirely new: computer-generated imagery has long reproduced the interaction between lens and light beams in the form of lens flare (fig. 06). This optical defect, avoided as much as possible in real photography because it is considered a flaw (fig. 07), became in computer-generated imagery a sign of programming virtuosity and a popular effect, though often used clumsily to give an impression of reality (fig. 08). The point, precisely, is to use a technical limitation of physical shooting to suggest that, since there are lens flares, a real lens was used, and therefore what we are shown is an image of the real world. This reality effect is pushed to its climax in J. J. Abrams' Star Trek (fig. 09): almost every shot, real or computer-generated, contains flares.

Example of photographic language

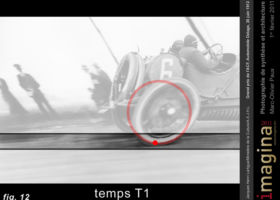

Let's look at an example of a technical limitation of photography as a source of meaning. Here is a photograph of a Formula 1 car at the 2009 Monaco Grand Prix (fig. 10). We know that such a car is fast, but it is hard to read its speed in the image: not how fast it is moving, but simply whether it is fast or not. Here is another picture (fig. 11), by Jacques Henri Lartigue, about a hundred years old. Although we do not know how fast this car was going, it was certainly slower than a Formula 1. Yet this picture conveys an effect of speed; it expresses the idea of speed. The photograph was long considered a failure, yet it is very expressive, and this expressiveness comes precisely from the limits of the photographic technology of the time.

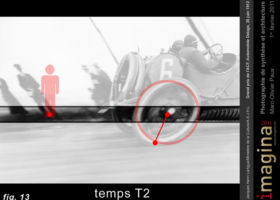

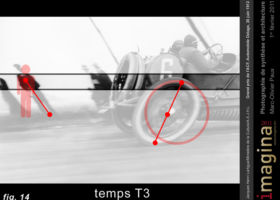

Let me explain: the shutter curtains were horizontal, and the slit (fig. 12) between them, which let the light through, moved from bottom to top. While the slit travelled, the car moved to the right (fig. 13). The camera, following it, moved too; consequently, the people in the background (fig. 14) appear to move to the left. Put all together (fig. 11), we get a photograph with an unusual geometry that expresses the idea of speed. Another, better-known effect is motion blur (fig. 15), which is easy to produce in computer-generated imagery (fig. 16).
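The Lartigue distortion can be reasoned about row by row: each row of the film is uncovered by the slit slightly later than the previous one, so a moving subject is recorded at a different position in each row. A minimal Python sketch under simplifying assumptions of ours (constant slit speed, constant image-plane speeds, slit width neglected); the function names are illustrative. The last function gives the length of a motion-blur streak, the effect a renderer produces by sampling the scene over the exposure interval.

```python
def row_exposure_times(height_px, travel_time_s):
    """Time at which the shutter slit uncovers each image row.

    Row 0 is the first row reached by the slit; the slit is assumed to
    cross the frame at constant speed in travel_time_s seconds.
    """
    if height_px == 1:
        return [0.0]
    return [travel_time_s * r / (height_px - 1) for r in range(height_px)]

def row_offsets(height_px, travel_time_s, object_speed_px_s, pan_speed_px_s=0.0):
    """Horizontal position of an object in each row, relative to row 0, in pixels.

    object_speed_px_s is the object's own motion on the image plane;
    pan_speed_px_s is the camera's panning, which shifts everything the
    opposite way (a static background gets -pan_speed_px_s).
    """
    return [(object_speed_px_s - pan_speed_px_s) * t
            for t in row_exposure_times(height_px, travel_time_s)]

def blur_length_px(speed_px_s, exposure_time_s):
    """Length of a motion-blur streak: distance travelled while a point is exposed."""
    return abs(speed_px_s) * exposure_time_s

# A car moving faster than the pan, and a static background, over 5 rows:
car = row_offsets(5, 0.02, object_speed_px_s=1000, pan_speed_px_s=400)
background = row_offsets(5, 0.02, object_speed_px_s=0, pan_speed_px_s=400)
print(car)
print(background)
```

The car's offset grows with each row while the background's decreases, so the two shear in opposite directions, which is the unusual geometry of fig. 11.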

Photorealism

One of the main purposes of CGI in architecture (fig. 17) is to produce an image of a future reality. Since our models of reality are primarily photographs, and since a scene is generally built according to the images that will be made from it, computer-generated imagery tends not toward realism but toward photorealism. And since CGI lets you go beyond the limits of reality (for example, it never rains spontaneously in digital space), it becomes hyperrealism, or even dream. We have gone from reality to dream by way of photography.

Computer-generated image

Through a few elements (camera, scene, flare, photorealism), we have seen that computer-generated imagery and its aesthetic are directly inspired by photographic shooting and photographic aesthetics. I mean that if computer-generated imagery tends to reproduce the physical, and especially optical, laws of reality, it is in order to create images that look like photographs of the real world. We approximately recreate the real world in digital space and take realistic perspective images of it, i.e. photographs.

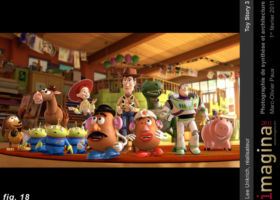

When it is not reduced to photorealism, computer-generated imagery comes closer to a more sober, more schematic aesthetic, resembling the cartoon and the animated cartoon. In recent years, helped by the film industry, it has acquired its own aesthetic: see Toy Story (fig. 18); Final Fantasy (fig. 19), which, despite its aim of realism, reveals on closer inspection an aesthetic of its own, a computer-generated one; Clone Wars (fig. 20); and Despicable Me (fig. 21), also nominated for the Imagina awards. We can see that this aesthetic, whether more or less realistic, is also of high quality and great originality.

To return to photorealism in the graphical representation of architecture: computer-generated imagery avoids the constraints imposed by the technical limitations of physical photography (e.g. depth of field). It also avoids contextual constraints: gravity (e.g. many animations move like a fly); the photographer's reflection, which never appears in a mirror (fig. 22, animation unavailable); and bad weather (it is always sunny and there is no longer a shady side, i.e. the sun also lights the north face). Without these constraints, it is easy to produce hyperrealism, and a fortiori dream images.

I think this is mainly because computer-generated imagery in architecture is often seen as a marketing object modeled on entertainment cinema and advertising photography. The special effects that photorealistic computer-generated imagery brings to cinema can create fantasy worlds with every appearance of reality, and we are amazed and captivated by these wonderful films. Since marketing seeks above all to seduce, it is better to present architecture enhanced by CGI: it sells better. This always produces beautiful images, but when pushed too far, some images become as banal as laundry-detergent ads (fig. 23 to fig. 34). Computer-generated imagery is therefore rarely used as a tool for dialogue and learning, but rather, at best, to dazzle, or at worst as propaganda. On the other hand, you probably know the work of the Danish architecture firm BIG, which has made remarkable use of real video and computer-generated imagery (fig. 35, animation).

To return to the beautiful sunny images: they are understandable in architectural competitions, where there is rarely any dialogue between competitors and jury, or in sales, which promote products through dreams and symbols rather than reality. But architecture does not seem to me to be made of competitions and sales; it is most often a dialogue between architect and client, between architect and users (whether public or private), in short, between partners.

We can understand why the « 3D ethics charter » (fig. 36), signed here last year, established rules of conduct, in particular, I quote, to “create computer-generated images or three-dimensional scenes which are not likely to influence the decision-maker, the client or the public without his/her knowledge” (free translation from French). This reveals a real unease about what imagery makes possible once freed from the material constraints of our physical world. There is clearly a desire for truth, or at least truthfulness. The question has already arisen in photography: for example, a photographed room often looks larger than the room itself seen with the naked eye (fig. 37), not to mention spatial and optical illusions such as the Ames room (fig. 38), which is built to look « square » from a single viewpoint while distorting the scale relationship between the people and the room. Yet, in my opinion, and without wishing to offend anyone, it seems naive and wishful to expect the truth of an image to follow solely from a statement of intent, from the solemn commitment of its creator, knowing that in computer-generated imagery everything is built and everything is manipulated.

In my opinion, computer-generated imagery should be taken for what it is: pure fiction. Hence the attempt to introduce some codification, some language, into the creation of computer-generated images, and to look at how to make CGI credible with the intention of showing a possible truth to come, a virtual reality such as the architectural project.

Prospects for codification

First, drawing on photography, several elements must be taken into account. We have already seen two: flare, as an indication of the light source (e.g. the sun), and motion blur, as an indication of the moving parts of the scene (people and vehicles in general).

There is also depth of field (fig. 39), which draws the eye to one part of the scene or sets apart an element in the foreground and/or background (fig. 40).
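In a renderer that simulates depth of field (usually through a thin-lens camera model), the zone of acceptable sharpness follows the same relations as in photography. A sketch in Python using the standard thin-lens depth-of-field approximations; the function names are illustrative and the 0.03 mm circle of confusion is an assumed default, commonly quoted for the 24×36 format:

```python
import math

def hyperfocal_mm(focal_mm, f_number, coc_mm=0.03):
    """Hyperfocal distance H = f^2 / (N c) + f (thin-lens approximation)."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Near and far limits of acceptable sharpness, in millimetres.

    coc_mm is the acceptable circle of confusion (an assumed value).
    Beyond the hyperfocal distance the far limit is infinite.
    """
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        far = math.inf
    else:
        far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far

# A 50 mm lens focused at 5 m: a wide aperture isolates the subject,
# a small one keeps most of the scene sharp.
for n in (2.8, 11):
    near, far = depth_of_field_mm(50, n, 5000)
    print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```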

Of course there is shift, a typical technique of architectural photography which keeps a building's verticals vertical in the image by shifting the optical center (fig. 41). However, this view is idealized compared with the experience of our own vision: buildings seem to lose their scale despite the presence of references such as people (fig. 42).
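The difference between tilting the camera and shifting it can be checked with a plain pinhole projection. In the sketch below (Python; the `project` function and its coordinate convention are ours), a vertical edge projected with an upward-pitched camera lands at two different x positions, so verticals converge; with a level camera both ends share the same x, and an offset of the image window, exposed in many 3D packages as a "shift" or "film offset" setting, recentres the facade instead:

```python
import math

def project(point, focal, pitch_rad=0.0, shift_y=0.0):
    """Pinhole projection of a world point (X, Y, Z): X right, Y up, Z forward.

    pitch_rad tilts the whole camera upward (a photographer without a shift
    lens); shift_y moves the image window vertically while the camera stays
    level (the equivalent of a lens shift).
    """
    x, y, z = point
    c, s = math.cos(pitch_rad), math.sin(pitch_rad)
    # Express the point in the camera frame (rotation about the X axis).
    y_cam = y * c - z * s
    z_cam = y * s + z * c
    return focal * x / z_cam, focal * y_cam / z_cam - shift_y

focal = 35.0
bottom, top = (2.0, 0.0, 10.0), (2.0, 20.0, 10.0)  # a vertical building edge

# Tilted camera: the two ends of the edge get different x, verticals converge.
print(project(bottom, focal, pitch_rad=0.5)[0], project(top, focal, pitch_rad=0.5)[0])

# Level camera with shift: same x for both ends, and the facade's mid-height
# (Y = 10 at Z = 10) is brought to the image centre.
shift = focal * 10.0 / 10.0
print(project(bottom, focal, shift_y=shift), project(top, focal, shift_y=shift))
```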

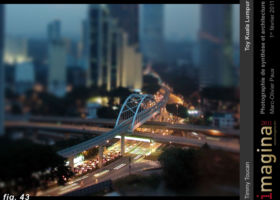

A related technique is tilt, very fashionable in photography, which, like depth of field, draws the eye to one part of the image (fig. 43). It also gives the landscape the look of a scale model, because when we look at a model our eye is very close to the object and can only focus on a small part of it. This effect is easy to apply in computer-generated imagery (fig. 44 and fig. 45).
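One common way to obtain the miniature look in post-production is not an optical simulation of the tilted lens at all, but a graduated blur: a sharp horizontal band, with blur growing above and below it. A deliberately simple Python sketch of that approximation on a greyscale image stored as a list of rows; the band parameters and function names are hypothetical, and the blur is a horizontal box blur only:

```python
def blur_radii(height, focus_row, band, max_radius):
    """Blur radius per row: 0 inside the sharp band around focus_row,
    growing linearly to max_radius at the row farthest from it."""
    farthest = max(focus_row, height - 1 - focus_row)
    radii = []
    for row in range(height):
        d = abs(row - focus_row)
        if d <= band or farthest <= band:
            radii.append(0)
        else:
            radii.append(round(max_radius * (d - band) / (farthest - band)))
    return radii

def box_blur_row(row, radius):
    """Average each pixel with its neighbours within +/- radius (clamped at edges)."""
    if radius == 0:
        return list(row)
    out = []
    for i in range(len(row)):
        window = row[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out

def fake_tilt_shift(image, focus_row, band, max_radius):
    """Apply the graduated blur: rows far from the focus band get blurrier."""
    radii = blur_radii(len(image), focus_row, band, max_radius)
    return [box_blur_row(r, k) for r, k in zip(image, radii)]

image = [[0, 0, 9, 0, 0] for _ in range(5)]
for row in fake_tilt_shift(image, focus_row=2, band=0, max_radius=2):
    print(row)
```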

Another technique to consider is the sweep panorama, which can show the photographed space over up to 360° (fig. 46). But because of the curves it produces and its elongated format, it is not easy to use (fig. 47). It is, however, used to build interactive objects, as we will see later.
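The curves come from the projection itself: a sweep panorama maps the scene onto a cylinder around the photographer, and on a cylinder a straight horizontal edge is no longer straight. A small Python sketch, with a coordinate convention of our own choosing (eye at the origin, X right, Y up, Z forward):

```python
import math

def to_cylindrical(x, y, z):
    """Map a point onto a cylindrical panorama around the eye.

    Returns (theta, v): theta is the horizontal angle in degrees (0 = straight
    ahead), v the height on a unit-radius cylinder, i.e. Y divided by the
    point's horizontal distance from the eye.
    """
    theta = math.degrees(math.atan2(x, z))
    v = y / math.hypot(x, z)
    return theta, v

# The top edge of a straight wall 5 m ahead, 3 m above eye level:
for x in (-10.0, -5.0, 0.0, 5.0, 10.0):
    theta, v = to_cylindrical(x, 3.0, 5.0)
    print(f"theta = {theta:6.1f} deg   height = {v:.3f}")
```

The edge is highest in the middle of the panorama and drops toward the sides, so a straight wall comes out bowed; the wider the sweep, the stronger the curve.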

There is also a simple technique: moving the point of view back and reducing the angle of view. In real photography, walls often prevent the photographer from backing up. To capture the space, he/she must use a wide-angle lens, which, as we have seen, makes the space look larger than it is and also introduces perspective effects that do not match the experience of our vision (fig. 48). But in CGI, nothing prevents moving the point of view back, because walls are no longer an obstacle (fig. 40).
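Backing up while narrowing the angle can be quantified: to frame a subject of width w with a horizontal angle θ, the camera must stand at d = w / (2·tan(θ/2)). A Python sketch under the simplifying assumption of a flat, centred subject; `near_clip_past_wall` is a hypothetical helper showing how, in a renderer, a near clipping plane set just past a wall lets the camera stand outside the room and still see into it:

```python
import math

def distance_to_frame(width_m, angle_deg):
    """Camera distance at which a flat, centred subject of width_m exactly
    fills a horizontal angle of view of angle_deg."""
    return width_m / (2 * math.tan(math.radians(angle_deg) / 2))

def near_clip_past_wall(camera_to_wall_m, wall_thickness_m, margin_m=0.01):
    """Hypothetical helper: a near clipping distance that hides a wall
    standing between the camera and the room it looks into."""
    return camera_to_wall_m + wall_thickness_m + margin_m

room_width = 6.0
for angle in (100, 70, 40):
    print(angle, "deg ->", round(distance_to_frame(room_width, angle), 2), "m")
```

Narrowing the angle from a wide-angle 100° to 40° more than triples the required distance, which in a real room usually means standing outside it.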

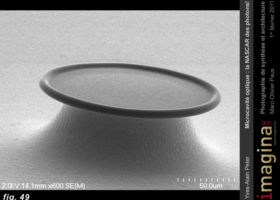

Borrowing from scientific photography, a scale reference (fig. 49) may be introduced into synthesis photography. Since a photograph is a perspective, from which, by definition, dimensions cannot be read as accurately as on a plan, a reference gives the viewer a better idea of a building's size. When photography was still considered a servant of the arts, scale references appeared in architectural photography (fig. 50): for example, a cane leaning against a pillar (fig. 51) or, more explicitly, a graduated rod in a ruin (fig. 52), as is also done in archaeology (fig. 53). Renderings in « false colors » could perhaps also be explored.

Then, of course, you can add people (fig. 54). This is a common element; there are even far more people in computer-generated imagery than in real photography. But they are difficult to handle: first, because they are often added at the post-production stage, so their scale bears an arbitrary relation to that of the architecture shown; and second, because people can catch the eye at the expense of the architecture. One solution is to make them ghostly (fig. 55), but in a photorealistic image this sometimes looks strange. People are also used in animation, with the added difficulty of managing their timing (fig. 56, animation unavailable). The question of people in the graphical representation of architecture, in real as well as in synthesis photography, is certainly a broad topic that goes far beyond the scope of this talk.

When a perspective image is presented, the difficulty is to locate it within the architectural project. In certain video games, for example, a map is often built in wherever the player needs to find his or her bearings (fig. 57 and fig. 58). In architectural representation, it would be welcome for this kind of indication to appear more often, so that viewers can locate themselves in the built space (fig. 59 and fig. 60), or at least for north to be indicated.

A very interesting element that comes from computer-generated imagery itself is ambient occlusion (fig. 61). This process darkens the corners of a scene, that is to say, the angles formed by its different objects, simulating the fact that a surface receives less light in areas close to other surfaces. With this method, the scene acquires a uniform brightness while revealing the three-dimensionality of space better than an outline rendering does. As in a cardboard or plaster model, the colors and textures of materials are neutralized to better reveal spatial relationships (fig. 62).
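The principle can be shown with a deliberately tiny Monte Carlo estimate: from a point, cast rays over the hemisphere above its surface and count how many are blocked by nearby geometry within a maximum distance. The scene here (a floor meeting a single infinite wall), the cosine-weighted sampling and the function names are our assumptions; production renderers apply the same idea to real geometry.

```python
import math
import random

def cosine_sample_hemisphere(rng):
    """Random unit direction above a floor (normal +Y), cosine-weighted."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2 * math.pi * u2
    return r * math.cos(phi), math.sqrt(1.0 - u1), r * math.sin(phi)

def ambient_occlusion(px, max_dist, samples=4000, seed=0):
    """Ambient-occlusion factor (1 = fully open, 0 = fully occluded) for the
    floor point (px, 0, 0), px > 0, next to an infinite wall in the plane X = 0.

    A ray counts as occluded if it reaches the wall within max_dist; limiting
    the distance is what keeps the darkening local to corners.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        dx, dy, dz = cosine_sample_hemisphere(rng)
        # Distance along the unit ray to the plane X = 0 is px / -dx.
        if dx < 0 and px / -dx <= max_dist:
            hits += 1
    return 1.0 - hits / samples

# Moving away from the wall, the floor brightens back to 1.0:
for px in (0.01, 0.5, 2.0):
    print(px, round(ambient_occlusion(px, max_dist=1.0), 3))
```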

Finally, with the development of mobile Internet access, it would be interesting to introduce more interactivity into the graphical representation of architecture, even though interactivity cannot be printed on glossy paper and is more complex and expensive to produce. The simplest form of interaction is an animation controlled by a slider. For example, the sun's path, a basic design tool, can be shown either in a non-photorealistic representation (fig. 63, animation unavailable) or in a photorealistic one (fig. 64, animation unavailable). What is interesting is to « walk » through the course of a day in order to understand « the masterly, correct and magnificent play of masses brought together in light. »
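Driving such a slider only requires the sun's elevation and azimuth for each position. A Python sketch using the standard spherical-astronomy relations, with Cooper's approximation for the solar declination; it works in local solar time (hour angle 15° per hour from solar noon, negative in the morning), ignores the equation of time and refraction, and the function names are ours:

```python
import math

def solar_declination_deg(day_of_year):
    """Approximate solar declination in degrees (Cooper's formula)."""
    return 23.45 * math.sin(math.radians(360.0 / 365.0 * (284 + day_of_year)))

def sun_position(latitude_deg, declination_deg, hour_angle_deg):
    """Solar elevation and azimuth in degrees (azimuth clockwise from north).

    hour_angle_deg: 15 degrees per hour from local solar noon, negative in the
    morning. Not valid exactly at the poles or with the sun at the zenith.
    """
    lat, dec, h = map(math.radians, (latitude_deg, declination_deg, hour_angle_deg))
    sin_el = math.sin(lat) * math.sin(dec) + math.cos(lat) * math.cos(dec) * math.cos(h)
    el = math.asin(max(-1.0, min(1.0, sin_el)))
    cos_az = (math.sin(dec) - math.sin(el) * math.sin(lat)) / (math.cos(el) * math.cos(lat))
    az = math.degrees(math.acos(max(-1.0, min(1.0, cos_az))))
    if hour_angle_deg > 0:  # afternoon: the sun is west of the meridian
        az = 360.0 - az
    return math.degrees(el), az

# Slider positions for Monaco (about 43.7 N) at the June solstice, every two hours:
dec = solar_declination_deg(172)
for hour in range(6, 19, 2):
    el, az = sun_position(43.7, dec, 15.0 * (hour - 12))
    print(hour, "h:", round(el, 1), "deg high,", round(az, 1), "deg azimuth")
```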

Speaking of walks: with panoramic images, it is possible to build interactive objects that do not require the sophisticated technology that virtual immersion may demand. This is QTVR, short for QuickTime Virtual Reality, a technology some fifteen years old that is not always easy to implement but, in my opinion, is still valid for the graphical representation of architecture (fig. 65, animation unavailable). Of course, such walks can also be based on a 360° video consisting of a series of spherical panoramas. Here is a simple test by Yellow Bird (fig. 66, animation no longer available), a Dutch company that applies the QTVR concept to real video (fig. 67, animation no longer available).
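A spherical panorama, whether rendered or filmed, is commonly stored in equirectangular form, where the longitude and latitude of each viewing direction become horizontal and vertical pixel coordinates. A Python sketch of the mapping and its inverse, under a coordinate convention of our own choosing (not taken from QTVR or any particular viewer); the inverse is what a panorama viewer typically evaluates for each screen pixel as the user looks around:

```python
import math

def direction_to_equirect(dx, dy, dz, width_px, height_px):
    """Pixel coordinates (u, v) of a view direction in an equirectangular panorama.

    Convention (assumed): X right, Y up, Z forward; straight ahead maps to the
    image centre, straight up to the top row.
    """
    lon = math.atan2(dx, dz)                                    # -pi..pi, 0 = ahead
    lat = math.asin(dy / math.sqrt(dx * dx + dy * dy + dz * dz))  # -pi/2..pi/2
    u = (lon / (2 * math.pi) + 0.5) * width_px
    v = (0.5 - lat / math.pi) * height_px
    return u, v

def equirect_to_direction(u, v, width_px, height_px):
    """Inverse mapping: unit view direction for panorama pixel (u, v)."""
    lon = (u / width_px - 0.5) * 2 * math.pi
    lat = (0.5 - v / height_px) * math.pi
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))

# Looking ahead, to the right and straight up in a 4000 x 2000 panorama:
for d in ((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)):
    print(d, direction_to_equirect(*d, 4000, 2000))
```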

I have presented here my vision of synthesis photography applied to the graphical representation of architecture, and its potential for communication. Thank you for your attention.

Imagina, Monaco, 1 February 2011